By Luis J. Diaz, Maria C. Anderson, John T. Wolak and David Opderbeck[1]*

Abstract

Cloud computing can be a highly effective means of avoiding information technology costs and is an attractive option for higher education institutions. Cloud computing also creates an incremental risk of data breaches, along with the accompanying privacy concerns that arise when personally identifiable information is stored on third-party servers accessible over the internet. Officers and board members of an institution considering a move to the cloud are well advised to engage in robust diligence and to be adequately informed of the benefits and risks of migrating substantial amounts of sensitive data to the cloud. This article provides timely information to help higher education institutions understand the nature of cybersecurity risks and preparedness, and how those risks may be mitigated so that institutional officers and board members properly discharge their fiduciary duties.

I. Introduction

Technological innovation now makes it possible to conduct business at the speed of thought. The mass of data generated by the “internet of things”[2] is stored on remotely connected servers located throughout the world. While this innovation undoubtedly benefits society, business, and institutions of higher education, it also creates incremental risks in the form of data breach disasters, in which personally identifiable information (PII) and other sensitive information about customers, employees, and business partners is inadvertently disclosed.

Today, the news is filled with horror stories of such data breach disasters at some of the world’s leading organizations. It seems that no one is immune from a data breach. In the aftermath of such an event, stock prices can plummet, public opinion can shift, and officers and directors can be terminated for failing to exercise sound judgment in monitoring and mitigating those risks. The recent breaches at Target Corp.[3] and Parsippany, New Jersey-based Wyndham Worldwide Corp.[4] exemplify the tsunami of litigation that is likely to follow a major breach. This is only the beginning, however, because the duties of officers and directors with respect to these realities of the global economy are still evolving. Given the standards now emerging in the case law, it is reasonably foreseeable that many more data breach lawsuits will be filed in the future. Reflecting this trend, the Securities and Exchange Commission issued guidance in 2011 indicating that it deems technology and privacy breaches potentially material. SEC Chairwoman Mary Jo White has said that cyber threats are “of extraordinary and long-term seriousness. They are first on the [SEC’s] division of [market] intelligence’s list of global threats, even surpassing terrorism.”[5]

In light of these new realities, officers and directors at all types of organizations, including colleges and universities, would be well advised to ensure that their organizations engage in a thoughtful process to implement adequate physical, electronic, and other security measures to prevent, manage, and respond to data breaches. The failure to do so can invite consequences like those seen at Target, where a leading proxy advisory firm recommended that shareholders vote against seven of the ten directors for failing to adequately manage cyber risks. Aside from the risk of breach-related litigation, it is also reasonably foreseeable that both federal and state regulators will become increasingly aggressive with respect to regulatory compliance, fines, and monitoring activities.

Higher education institutions and their officers and directors are not exempt from these obligations. Many state laws impose fiduciary duties upon the boards of governors or trustees and the administrators of public and private universities that require them to engage in a robust due diligence process to ensure that cyber risks are properly identified and managed. This article seeks to provide practical guidance concerning the federal and state laws applicable to higher education, and on how officers and directors at these institutions can implement adequate policies, procedures, and practices to mitigate cyber risks and threats relating to potential data breaches.

II. Director and Officer Fiduciary Duties in the Face of Cyber Security Issues

Public awareness of director and officer liability for cyber attacks was elevated after a breach of consumer records at Target.[6] Relying on case law that recognizes a board’s obligation to oversee corporate risk, commentators suggested after the Target breach that liability for failure to monitor cyber risk could be imputed to individual board members who fail to discharge their fiduciary obligations by either: (a) “utterly” failing to implement “any reporting or information system or controls”; or (b) having implemented such reporting or information systems, consciously failing to monitor or oversee them so that board members were “disabled from being informed of risks or problems requiring their attention.”[7] University officials should therefore be mindful of the legal risks facing the members of their governing boards, and should ensure that board members take an active role in assessing the risks associated with the information security systems selected for implementation and are regularly updated through reporting systems.[8]

In the United States, a multitude of sources may impose liability upon board members for lapses in judgment related to cyber security. These sources include federal laws, such as the Fair Credit Reporting Act, the Sarbanes-Oxley Act, the Health Insurance Portability and Accountability Act (HIPAA), the Family Educational Rights and Privacy Act (FERPA), and the Federal Information Security Management Act (FISMA), as well as state statutes and the common law. Potential plaintiffs include the Federal Trade Commission, the U.S. Securities and Exchange Commission, the Department of Justice, state attorneys general, and the individuals or companies whose data has been breached.[9] Higher education is particularly vulnerable to data breaches because, as the U.S. Department of Education has noted, “[c]omputer systems at colleges and universities [are] favored targets because they hold many of the same records as banks but are much easier to access.”[10]

A survey conducted by the Association of Governing Boards of Universities and Colleges and United Educators found that, while full boards have been increasingly engaged in risk discussions, “conflicting answers on the amount and quality of information boards receive on risk raised questions about the value of that information.”[11] While 60 percent of respondents to that survey reported that the information boards received (particularly in connection with financial risks) was adequate, only 39 percent strongly agreed that enough information was shared to fulfill their legal and fiduciary duties.[12] Accordingly, because a board’s failure to actively address cyber risk management and information security risks can impose liability upon individuals,[13] members of governing boards must be provided adequate information in order to discharge their fiduciary duties.[14]

Although the Sarbanes-Oxley Act has limited application to higher education, it has raised expectations of accountability in governance, regardless of whether a governing board oversees a corporation or a not-for-profit institution.[15] Members of not-for-profit governing boards who fail to meet the expectations of this Act may find themselves subject to removal or may not be indemnified if they are sued by affected students, alumni, or employees.[16] Board members of not-for-profit institutions, whether public or private universities, may face director and officer liability suits for failing to discharge their duties, brought by broader classes of plaintiffs that may include other board members, donors, employees, students, vendors, contractors, other not-for-profit entities working in collaboration with the institution, and/or government agencies with regulatory authority over the institution.[17] While suits based upon such causes of action have thus far largely settled or been dismissed for failure to demonstrate causation or damages related to identity theft, suits continue to be filed, and the technological capacity to identify the use of such information continues to develop; that capacity requires constant monitoring to evaluate its evidentiary potential in damage claims.[18]

Governing boards of higher education institutions are commonly referred to as “the guardians” of the university and, as such, owe fiduciary duties of care and loyalty similar to those of their counterparts at for-profit corporations.[19] The degree of their fiduciary obligations varies depending upon the institution’s bylaws. However, as a general rule, board members must promote the institution’s best interest, disclose to fellow board members any material information that may not be readily known, and discharge their duties of care and loyalty toward the institution in good faith.[20]

A. The Duty of Care

The duty of care relates to the governing board member’s competence in performing his or her functions and requires the care that an ordinarily prudent person would exercise in a like position under similar circumstances.[21] The duty of care also requires the board member to exercise his or her responsibilities and decision-making authority in good faith and with due diligence.[22] The duty of care does not allow a board member to fail to supervise the organization or, even when acting in good faith, to neglect to make informed decisions.[23] Finally, the duty of care requires that board members be equipped with the information they need to make informed decisions.[24] A recent survey found that only 12 percent of board members frequently receive briefings and reports on cyber threats.[25] If a board member is not regularly informed of the institution’s cyber security policies, procedures, and risks, he or she may be unable to effectively oversee or approve institutional initiatives, and that failure may amount to a breach of the duty of care.[26]

B. The Duty of Loyalty

The duty of loyalty requires a member of a governing board for a higher education institution to act in what he or she reasonably believes to be in the best interests of the organization, in light of its stated purposes.[27] This requires the trustee to affirmatively protect the interests of the organization and to refrain from doing anything that would be injurious to the organization.[28] The duty of loyalty requires the board member to place the interests of the institution above his or her own, and is largely concerned with addressing direct or indirect conflicts of interest between the board member and the organization.[29] As with the duty of care, the vast majority of state laws provide that board members of a not-for-profit are subject to a duty of loyalty, just as board members of a for-profit corporation are.[30]

III. Summary of Legal Obligations to Facilitate a Board’s Duty of Care and Loyalty

A. The Applicability of FERPA, HIPAA, and FISMA to Higher Education

Higher education institutions must comply with FERPA,[31] FISMA,[32] and, if applicable, HIPAA,[33] with respect to the security of their student records or other data.[34] FERPA sets the standard for student privacy, and federal funding may be withheld from any institution with a policy or practice of disclosing student information without authorization.[35] Because FERPA protects the privacy of student educational records[36] by regulating to whom and under what circumstances such records may be disclosed, its provisions apply with particular force when those records are shared with cloud software service providers.[37]

Directory information may be made public after an institution gives all students notice of the categories of directory information and provides them an opportunity to elect to keep such information private.[38] Non-directory information is all other information related to a student and maintained by a higher education institution, including, without limitation, Social Security numbers or student identification numbers.[39] The disclosure of non-directory information or PII to a third party without the student’s prior written consent is permitted only if it qualifies for one of FERPA’s defined exceptions.[40] Faculty, staff, and other officials of the institution may access non-directory information under FERPA if they have a legitimate educational interest in doing so.[41] The school official exception applies to third-party cloud providers who are given access to student education records regulated by FERPA[42] so long as they agree: (1) not to redisclose the information without the student’s prior consent,[43] and (2) to use the information only “for the purposes for which the disclosure was made.”[44]
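
The disclosure rules summarized above can be expressed as a short decision procedure. The following Python sketch is illustrative only: the names `VendorTerms`, `school_official_exception`, and `may_share_with_vendor` are hypothetical, and the sketch encodes only the conditions described in this paragraph, omitting FERPA's other exceptions and any further regulatory requirements.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VendorTerms:
    # Hypothetical flags recording what a cloud provider has contractually agreed to.
    no_redisclosure_without_consent: bool
    use_only_for_disclosed_purpose: bool


def school_official_exception(terms: Optional[VendorTerms]) -> bool:
    # Both agreements summarized in the text must be present; no agreement, no exception.
    return (
        terms is not None
        and terms.no_redisclosure_without_consent
        and terms.use_only_for_disclosed_purpose
    )


def may_share_with_vendor(is_directory_info: bool, notice_given: bool,
                          student_opted_out: bool, has_consent: bool,
                          terms: Optional[VendorTerms]) -> bool:
    # Properly designated directory information, where the student has not opted out.
    if is_directory_info and notice_given and not student_opted_out:
        return True
    # Disclosure with the student's prior consent.
    if has_consent:
        return True
    # Otherwise an exception is required; only the school official exception is modeled.
    return school_official_exception(terms)


compliant = VendorTerms(no_redisclosure_without_consent=True,
                        use_only_for_disclosed_purpose=True)
print(may_share_with_vendor(False, True, False, False, compliant))
```

In this sketch a vendor that has agreed only to the redisclosure limit, but not to the purpose limitation, would fail the check, mirroring the conjunctive "so long as they agree" language in the text.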

Higher education institutions providing academic programs that include the operation of medical hospitals or other treatment centers and submit claims for reimbursement of medical expenses to third parties are generally subject to HIPAA.[45] HIPAA requires a receiving party to maintain the confidentiality of protected health information (PHI) that includes individually identifiable health information[46] transmitted by, or maintained in, electronic, paper, or any other medium.[47] The HIPAA Privacy Rule requires that a covered entity maintain reasonable and appropriate administrative, technical, and physical safeguards to protect PHI privacy.[48] The Privacy Rule also requires covered entities to enter into business associate agreements with third party vendors who create, receive, maintain, or transmit PHI on their behalf.[49] Under the Privacy Rule, covered entities may only use or disclose PHI without patient authorization for treatment, payment, or health care operations.[50] For other purposes, a covered entity must obtain patient authorization prior to using or disclosing PHI, albeit subject to certain exceptions.[51]

In addition, pursuant to HIPAA, national standards for the security of individually identifiable health information were established (the “Security Rule”).[52] The Security Rule regulates electronic PHI (ePHI) and requires any entity subject to it to adopt policies and measures to ensure the confidentiality, integrity, and availability of any ePHI it creates, receives, maintains, or transmits.[53] As under the Privacy Rule, covered entities must also enter into written agreements with third parties who create, receive, maintain, or transmit ePHI on their behalf, and those agreements must be consistent with the obligations under the Security Rule.[54] Consequently, if a higher education institution is subject to HIPAA and intends to use cloud computing to manage its ePHI, the written agreement with the third-party vendor must be drafted to protect the institution from liability for improper disclosures.

Notably, the Security Rule anticipates that covered entities will be permitted some “flexibility” in their approach to implement security protocols.[55] As part of that flexible approach, covered entities are required to consider the following factors: (1) the size, complexity, and capabilities of the covered entity or business associate, (2) the covered entity’s or business associate’s technical infrastructure, hardware, and software security capabilities, (3) the costs of security measures, and (4) the probability and criticality of potential risks to electronic protected health information.[56] Penalties for violations of HIPAA can be severe and may include criminal charges as well as significant civil penalties.[57]

B. State Laws and Data Security

In the United States, there is no comprehensive, uniform set of laws in either the federal or state systems to regulate data privacy and the collection, use, and disposal of personal information.[58] There are, however, hundreds of privacy and data security laws that govern the collection and use of personal information, all with varying obligations and scope.[59] Many states have their own data privacy and security laws directed toward the protection of student or employee PII.[60] For example, many states have adopted laws that govern the collection, use, and disclosure of Social Security numbers, and other states, such as California, New Jersey, and New York, have enacted laws requiring the proper disposal of records that contain personal information.[61] Additionally, some state laws are more stringent than the protections afforded by HIPAA and are not preempted by federal regulation, so long as they are not inconsistent with the federal regulatory scheme.[62]

C. Cyber Security Compliance in Higher Education

Congress has debated comprehensive cyber security legislation since at least 2009.[63] Earlier proposals would have included a mandatory federal framework for cyber security compliance.[64] Later proposals have stressed voluntary public-private partnerships with liability protections and other incentives for compliance.[65] Comprehensive reform, however, has stalled in Congress for a variety of political and practical reasons.[66]

In February 2013, frustrated with Congress’ inability to pass comprehensive cyber security reform, President Obama issued Executive Order 13636, “Improving Critical Infrastructure Cybersecurity.”[67] This Order directed the National Institute of Standards and Technology (NIST) to develop a framework for cyber security compliance by owners and operators of critical infrastructure, although the Order does not impose any specific legal obligations on non-governmental entities.[68] NIST released its framework in February 2014, and it has become recognized as a “gold standard” in cyber security compliance.[69]

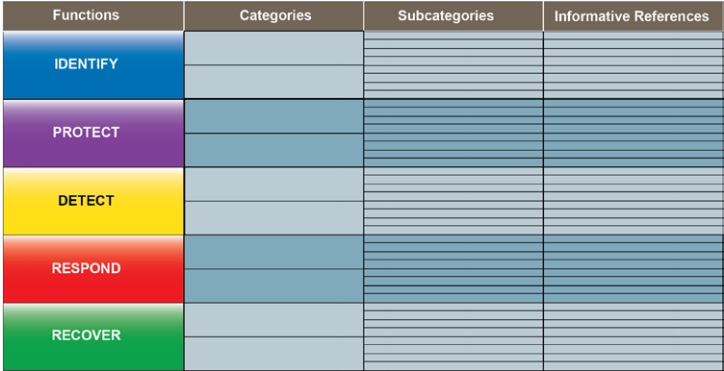

The NIST standards are arranged around what NIST calls the “Framework Core.”[70] The Framework Core identifies high-level cyber security functions, divides those functions into categories of outcomes, and relates the categories of outcomes to specific subcategories and informative resources:[71]

As the graphic from the NIST Framework illustrates, the core functions are “Identify,” “Protect,” “Detect,” “Respond,” and “Recover.”[72] If these core functions seem obvious, that is because, in a sense, they are. The NIST Framework does not break new ground concerning the basic requirements to prepare for and respond to cyber attacks. Rather, the Framework encourages organizations to think systematically and carefully about cyber risk. Surprisingly, even large organizations with significant information technology assets and professional IT staff often fail to engage in this kind of deliberate risk identification and planning.

The “Identify” function requires the organization to take an inventory of all of its “systems, assets, data and capabilities.”[73] The “Protect” function requires the organization to proactively develop safeguards to keep critical infrastructure services online in the event of a cyber emergency.[74] The “Detect” function requires the organization to implement procedures and technologies to identify adverse cyber security events,[75] including continuous, around-the-clock monitoring of security status and robust processes for detecting intrusions.[76] The “Respond” function focuses on containing the impact of adverse events;[77] it recognizes that adverse cyber security events are inevitable despite robust protection and detection mechanisms, and that although the risk of such events cannot be entirely eliminated, their impact often can be contained. The categories under this function are among those most frequently overlooked in cyber security risk management. Finally, the “Recover” function requires plans to restore information capabilities lost during an attack. The categories under this function should include restoration plans with definite timelines, as well as plans to learn from the event and make improvements in the protect, detect, and respond functions.[78]
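
The hierarchy the Framework Core describes (function, category, subcategory, informative references) maps naturally onto a nested data structure. The sketch below assumes the two-letter function identifiers used in version 1.0 of the Framework (ID, PR, DE, RS, RC) and populates a single illustrative branch; the listed informative references are a partial excerpt that should be checked against Appendix A before use, and the class and function names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple


@dataclass
class Subcategory:
    code: str      # e.g. "ID.AM-1"
    outcome: str
    informative_references: List[str] = field(default_factory=list)


@dataclass
class Category:
    code: str      # e.g. "ID.AM"
    name: str
    subcategories: List[Subcategory] = field(default_factory=list)


@dataclass
class Function:
    code: str      # e.g. "ID"
    name: str
    categories: List[Category] = field(default_factory=list)


# The five core functions, in the order the Framework presents them.
CORE = [
    Function("ID", "Identify"),
    Function("PR", "Protect"),
    Function("DE", "Detect"),
    Function("RS", "Respond"),
    Function("RC", "Recover"),
]

# One illustrative branch: Identify > Asset Management > first subcategory.
# Partial reference list; verify against Appendix A before relying on it.
CORE[0].categories.append(
    Category("ID.AM", "Asset Management", [
        Subcategory(
            "ID.AM-1",
            "Physical devices and systems within the organization are inventoried",
            ["ISO/IEC 27001:2013 A.8.1.1", "NIST SP 800-53 Rev. 4 CM-8"],
        ),
    ])
)


def walk(core: List[Function]) -> Iterator[Tuple[str, str]]:
    # Depth-first traversal yielding (code, label) at every level of the hierarchy.
    for fn in core:
        yield fn.code, fn.name
        for cat in fn.categories:
            yield cat.code, cat.name
            for sub in cat.subcategories:
                yield sub.code, sub.outcome


for code, label in walk(CORE):
    print(code, label)
```

Keeping the references on the subcategory, rather than on the function, reflects the Framework's design of tying each specific outcome to the published standards that address it.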

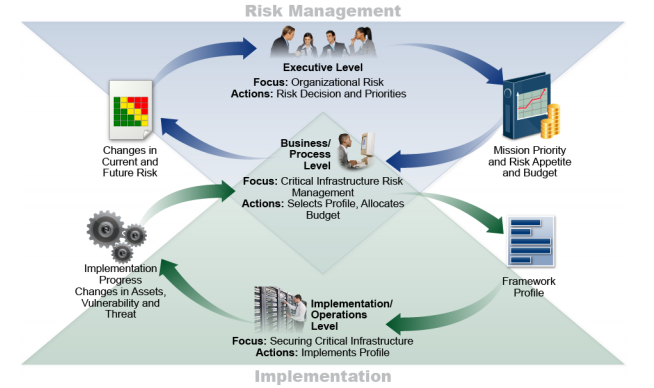

The NIST Framework includes a tier structure that enables an organization to assess its current state of compliance and to move towards higher levels of compliance.[79] A vital measure of which tier an organization has reached involves the formal approval and adoption at a policy level of organization-wide cyber security risk management practices. This means that cyber security should become elevated to a top institutional priority that entails functions across all business units from the executive level down. Cyber security is no longer an afterthought for only a few information technology functions. The following graphic from the NIST Framework illustrations this dynamic:[80]

Again, there is nothing particularly novel in this structure, but it illustrates that cyber security must become an executive level issue that receives constant attention and, importantly, budgeting.
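
As a rough illustration of the point that formal, policy-level adoption is a key marker of tier, the following sketch assigns one of the Framework's four tiers (Partial, Risk Informed, Repeatable, Adaptive) using only the risk management process attribute. This is a deliberate simplification, not NIST's assessment method: the Framework also considers the integrated risk management program and external participation, and the function `risk_process_tier` and its parameters are hypothetical.

```python
from enum import IntEnum


class Tier(IntEnum):
    PARTIAL = 1
    RISK_INFORMED = 2
    REPEATABLE = 3
    ADAPTIVE = 4


def risk_process_tier(approved_by_management: bool,
                      expressed_as_policy: bool,
                      adapts_from_lessons_learned: bool) -> Tier:
    # Formal, organization-wide policy is treated as the dividing line
    # between the lower and upper tiers.
    if expressed_as_policy:
        return Tier.ADAPTIVE if adapts_from_lessons_learned else Tier.REPEATABLE
    # Management approval without organization-wide policy.
    if approved_by_management:
        return Tier.RISK_INFORMED
    # Ad hoc practices with no formal approval.
    return Tier.PARTIAL


print(risk_process_tier(approved_by_management=True,
                        expressed_as_policy=False,
                        adapts_from_lessons_learned=False).name)
```

Using an ordered enumeration lets an institution compare its current and target tiers directly (for example, `current < target`) when planning the budgeted improvements the text calls for.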

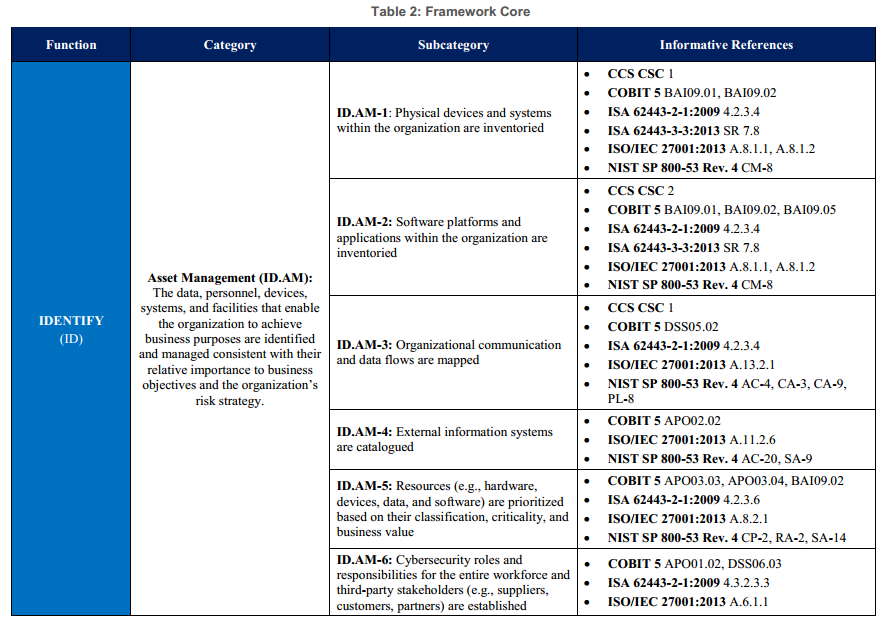

Appendix A to the NIST Framework includes a coded tool that can be used to conduct a cyber security compliance assessment[81] in a methodical, standardized fashion, providing codes for specific subcategory designators and identifying specific published standards relating to each subcategory.[82] For example, here are the cells for the first function, category, and subcategory:[83]
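
To show how the coded designators in Appendix A support a standardized assessment, the sketch below parses subcategory identifiers of the form `ID.AM-1` (function, category, and number) and tallies the share of assessed subcategories marked as implemented within each function. The designator pattern is assumed from version 1.0 of the Framework; the function names are hypothetical, and the assessment results in the usage note are placeholders, not data from any institution.

```python
import re
from collections import defaultdict
from typing import Dict, Tuple

# Assumed pattern for subcategory designators such as "ID.AM-1" or "PR.AC-4".
DESIGNATOR = re.compile(r"^(?P<function>[A-Z]{2})\.(?P<category>[A-Z]{2})-(?P<number>\d+)$")


def parse(designator: str) -> Tuple[str, str, int]:
    # Split a designator into its function code, category code, and number.
    match = DESIGNATOR.match(designator)
    if match is None:
        raise ValueError(f"not a subcategory designator: {designator!r}")
    return match.group("function"), match.group("category"), int(match.group("number"))


def coverage_by_function(assessment: Dict[str, bool]) -> Dict[str, float]:
    # assessment maps designator -> True (outcome in place) or False (gap).
    totals = defaultdict(lambda: [0, 0])  # function -> [implemented, assessed]
    for designator, implemented in assessment.items():
        function, _, _ = parse(designator)
        totals[function][1] += 1
        if implemented:
            totals[function][0] += 1
    return {fn: done / assessed for fn, (done, assessed) in totals.items()}


results = {"ID.AM-1": True, "ID.AM-2": False, "PR.AC-1": True, "DE.CM-1": False}
print(coverage_by_function(results))
```

With the placeholder results shown, the function returns `{'ID': 0.5, 'PR': 1.0, 'DE': 0.0}`, which would direct attention to the Identify and Detect functions. Rejecting malformed designators with an exception, rather than skipping them, keeps transcription errors from silently inflating the coverage figures.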

Obviously, with 14 pages of such detailed mappings within Appendix A to the NIST Framework (pages 20 to 34), the work involved in becoming compliant can seem impossibly daunting.[84] Moreover, some of the standards referenced in the NIST framework may not map directly onto the unique circumstances of higher education institutions. For these reasons, some universities and university trade organizations have adopted or proposed simplified models that focus on particular standards.

For example, the Higher Education Information Security Council (HEISC) has published an Information Security Guide keyed to the ISO/IEC 27002:2013 standard, which is one of the standards referenced in the NIST Framework.[85] The HEISC Guide incorporates 15 compliance domains, ranging from cryptography to supplier relationships.[86] As another example, the University of Ohio Information Risk Management Program condenses the NIST Framework into 30 risk areas within seven business functions, set out in only eight pages.[87] The business functions identified in the University of Ohio policy include management, legal, purchasing, human resources, facilities, and information technology.[88]

Other universities, colleges, and higher education providers similarly may benefit from information security planning that customizes the NIST Framework for application within their specific circumstances. Although cyber security compliance policies can become complex at the granular level of application, they all include some basic overarching themes, including the following:

- Cyber security compliance involves more than adherence to a specific legal requirement. It includes multiple legal requirements as well as contractual obligations and institutional risk management practices.

- Cyber security compliance is an ongoing process, not a one-time project.

- Cyber security compliance involves both technological measures and human resource management measures.

- The risks of a cyber security incident cannot be entirely eliminated. Cyber security compliance therefore involves procedures to identify and remediate incidents as well as procedures aimed at preventing incidents.

- Cyber security compliance is an executive-level concern that requires coordination across every significant operational unit in the organization.[89]

These general principles are as true for higher education institutions as they are for any other kind of enterprise. Indeed, the wide variety of sensitive data handled by higher education institutions, ranging from confidential and trade secret technological research to student health information, together with the diffuse nature of governance in many university systems, suggests that such institutions must make particular efforts to develop comprehensive, meaningful cyber security compliance programs.

Finally, in addition to these overarching compliance themes, public attention recently has focused on legislation that facilitates information sharing about security risks between the public and private sectors. The Cybersecurity Information Sharing Act of 2015 (CISA) was signed into law by the President on December 18, 2015, as part of the omnibus spending bill.[90] CISA allows private entities to share cyber threat information with the federal government without incurring liability under other laws that require certain information to be kept confidential, such as FERPA and HIPAA.[91] The new law appears to cover colleges and universities, as well as their officers, employees, and agents.[92] Information sharing proposals have been highly controversial among civil liberties advocates.[93] Now that CISA has been signed into law, colleges and universities will need to think carefully about procedures for logging potential threat information and about whether and when an employee or officer should report such information to the federal government.

The recent onslaught of cyber security cases does not, however, require board members to become experts in cyber security risk. Looking to the Wyndham case, supra, for guidance, a board can take several proactive steps in advance of a cyber security event, including: making data privacy and data security regular topics of discussion at board meetings; designating a specific committee with primary oversight of data security and ensuring that data protection measures are discussed regularly at its meetings; periodically retaining third-party consultants to assess the institution’s cyber security practices and remediating any deficient areas; and establishing a cross-functional incident response team with primary responsibility for investigating and responding to a cyber security breach.[94]

IV. Risk and Mitigation

Through a comprehensive risk analysis, a university’s board of governors or trustees and its administrators can ensure that organizational cyber risks are adequately mitigated through a combination of effective diligence, contract negotiation, and, in many instances, the purchase of cyber insurance coverage. These steps are necessary to provide effective governance and management of the university. Although cloud vendor contracts are not yet associated with the collateral issues typically raised in outsourcing or shared-control contracts, those contracting models offer worthwhile guidance about the risks created by shared responsibilities and possible liabilities, as well as ways to contract around common problems. As recent large-scale cloud failures demonstrate, a breach results not only in data recovery problems, but also in unfavorable publicity and extensive remediation and legal costs.[95]

A. Overview of Risks Associated with Cloud Computing

Cloud computing offers both benefits and risks that must be weighed. Educational institutions have employed cloud computing for a variety of needs, from hosting simple applications to complex, enterprise-wide human resources and student information management systems.[96] Cloud computing frequently offers granular pricing that lets institutions optimize their use of software or services and tailor it to the needs of students, alumni, or employees.[97] Moving system architecture to the cloud reduces the long-term costs of IT resources while increasing employees’ and students’ “anywhere, anytime” access to the resources the institution makes commonly available.[98] Remotely hosted resources are flexible, potentially including infrastructure, platforms, or software offered as a service, and these options offer cost savings through economies of scale, off-site hosting, and off-site maintenance.[99] The cloud’s modular, on-demand model permits educational institutions to reduce the sunk costs of quickly outdated hardware or data storage and to easily replace software institution-wide with more current applications.[100] By enabling faster updates, with no delay for procurement or individual installation, the institution can serve its various stakeholders more efficiently while reducing overhead costs.[101]

Against these benefits, decision-makers must educate themselves about the associated cyber risks in order to exercise sound judgment before migrating PII to the cloud. The use of cloud computing forces an institution to rely on the policies and security of a third-party vendor (and any affiliated data center the vendor uses), which creates incremental organizational risk that must be weighed against the inherent risk of the institution managing its own data and IT resources.[102] Here, we analyze the risks associated with the most common cloud service offered by vendors: public, multi-tenant cloud services, in which remote data centers host multiple customers’ data on the same servers without physical segregation.

As stated earlier, cloud computing creates incremental risk by outsourcing an institution’s IT functions to third-party vendors, which eliminates or impairs the institution’s control over its data, processing, and security.[103] This increased risk and the resulting increased liability from a breach by a third-party vendor are frequently borne directly by the institution itself.[104] These new risks must be analyzed in addition to the familiar vulnerabilities associated with IT functions, such as cyber security threats from networked mobile media, hardware malfunction, software installations, and malicious insiders or external cyber attacks. As a result, some institutions, particularly those with a larger volume of PII, trade secrets, or confidential data subject to high levels of regulation (e.g., under HIPAA requirements, Department of Defense procedures, or SEC oversight), may choose to avoid cloud computing because the additional risks, requirements, and potential exposures are too great.[105] Alternatively, such institutions may choose to create private, self-contained cloud computing systems to increase the level of control retained over the security of the data centers.[106]

Other educational institutions, particularly smaller schools with more limited data sets, may find it both safer and more economical to rely on the more advanced security provided by larger cloud vendors.[107] However, even these schools must ensure that such vendors can comply with the “school official exception” under FERPA.[108] For these smaller institutions, the incremental risk created by outsourcing the security of their student data is offset by the overall security benefits of systems more advanced than any they could afford individually. Larger educational institutions with more robust security processes will have to find other benefits and methods of risk mitigation to offset the incremental risk and strike a bargain with a positive net benefit by switching to cloud computing.[109]

Universities may also face different risk levels depending on whether they are public or private institutions. With different appetites for risk or different security risk profiles, each institution must achieve an acceptable balance of risk against benefit by identifying the incremental risks associated with cloud computing that are germane to their programs and then finding ways to mitigate those risks.[110] Some of the risks that require consideration include:

- Educational institutions remain legally liable for data breaches, even though control over security shifts to the cloud vendor. Moreover, a breach can subject the institution to the laws of each jurisdiction implicated, whether by the location of the data, of the compromised employee, student, or alumnus/a, or by the cloud vendor’s citizenship.[111]

- Any single breach may put a cloud vendor out of business or into bankruptcy, and for young or small vendors, a lack of significant assets and limited applicable or available insurance coverage may preclude full recovery of losses.

- PII, as well as data relating to the university’s research or intellectual property, may be compromised or commingled with third party data, including data belonging to competitors.[112]

- Cloud vendors may impose unreasonable or otherwise unacceptable policies or terms of service, including: failure to provide adequate indemnity for claims resulting from security breaches; lack of transparency regarding third party data center security; limitation of liability to amounts inadequate to meaningfully remedy the loss; exclusion of consequential damages; refusal to limit future use of client data; refusal to secure client consent before transferring data overseas; refusal to provide service level agreements or damages for disruption during outages; refusal to return data in usable form to the client after termination of the agreement; or refusal to abide by FERPA’s “school official exception” as it relates to direct control or the use and redisclosure of PII.[113]

- The physical location of cloud vendors’ servers around the world may result in trans-border information flow and could subject information to the laws of multiple foreign jurisdictions.[114]

- Cloud computing makes it difficult to administer enterprise-wide information security policies for risk mitigation, as well as resource mapping procedures for data forensics, preservation, and management.

- Because sensitive personal, financial, and other confidential information may be stored on the cloud vendors’ servers, risk of breach, loss, or liability must be analyzed in terms of publicity as well as the financial and legal consequences. Cyber attacks directed at cloud vendors may impact a large population of unrelated users and generate greater publicity.

Cloud vendors are reluctant to assume significant risks or the resulting liability because their prices are kept low through contractual provisions that limit liability and avoid indemnification for breaches of data availability, security, or privacy.[115] While substantial bargaining power or competitive leverage can help bring cloud vendors to the table to negotiate risk-sharing, these advantages likely will not be available to individual universities or smaller higher education nonprofits.[116] Because few institutions can individually claim those bargaining advantages, universities may consider pooling resources and forming consortia to bargain collectively with vendors, share the costs of due diligence, and secure insurance. Due diligence in determining which risks are most vital remains the best method of shoring up bargaining positions, as discussed below.

B. Best Practices for Higher Education When Considering a Move to the Cloud

When an institution of higher education makes the strategic decision to move its data and information technology systems to a third party cloud provider and procure software as a service, it should first establish a team of stakeholders. The team should include the institution’s general counsel; the highest ranking officials with overall authority over information technology and security, risk management, finance, and business administration; and the head of the business unit that will use the technology. These stakeholders should participate in the due diligence of the software service providers and the negotiation of their contracts, so that they are fully informed of, and understand, the risks to the institution of moving to a cloud environment. By involving key stakeholders in this manner, the institution can build consensus when recommending that its president and governing body approve the individual contract and the use of the particular technology resource, and can ensure both that there is fully informed consent to the risks inherent in this type of transaction and that the institution has developed techniques to mitigate them.

The information technology stakeholder should develop a checklist soliciting information from providers to assist in evaluating their security, and general counsel should develop a form of agreement that contains the terms and conditions appropriate for the risks the institution is willing to accept. The institution should solicit responses to the checklist from providers whose software services suit the institution’s needs. Because the checklist will solicit sensitive security information, the institution should be prepared to enter into a non-disclosure agreement with a provider before receiving its response. The individual assigned to oversee information technology security for the institution should then evaluate the responses and make a recommendation to the stakeholders. If the responses indicate an acceptable level of risk to the institution, the vendor should be provided the institution’s form of agreement to begin negotiations.

The checklist is the first step in the institution’s due diligence of the provider and should focus on the vendor’s security policies and processes to maintain, monitor, and test the adequacy of security to protect data from disclosure to unauthorized parties.[117] The checklist should identify the type of institutional data to be shared with or stored by the provider and should specifically ask whether it includes credit card information, health records, student records, or personally identifiable information, because federal and state law impose heightened obligations in the event of a breach involving such data. The checklist should ask whether the data will be stored outside the United States so that the institution can determine whether it would be subject to the laws of any foreign jurisdiction in the event of a breach.[118] The provider should also be asked to identify its methodology for exchanging the data, such as uploading through a secure web interface or secure file transfer, so that the institution can evaluate the security of the transfer. The checklist should solicit the policies of the provider (and of any third party subcontractors of the provider) on data security, data storage and protection, network systems and applications, and disaster recovery; the procedures for reviewing and updating those policies; and the policies that ensure compliance with the PCI Data Security Standard, HIPAA, and FERPA, so that the institution can verify that the provider has a comprehensive compliance plan. The checklist should request the provider’s SOC 1 and SOC 2 reports and the results of recent external audits and other tests, to assess the integrity of its systems and its vulnerability to penetration. In addition, the checklist should inquire about the physical security of and access restrictions to the provider’s data center, data storage area, and network systems; the provider’s response to security incidents; and the provider’s awareness training, so that the institution can evaluate the provider’s preparedness for a breach and its strategies for prevention.[119]
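As a minimal sketch of how the information technology stakeholder might track the checklist described above, the following Python example records the provider’s answer for each topic and flags any topic left unanswered before the responses go to the security reviewer. The topic labels paraphrase this section, and the names `CHECKLIST_TOPICS`, `VendorResponse`, and `ready_for_review` are hypothetical; they do not correspond to any standard questionnaire or tool.

```python
from dataclasses import dataclass, field

# Hypothetical checklist topics paraphrasing the due diligence items discussed in the text.
CHECKLIST_TOPICS = (
    "types of institutional data (credit card, health, student records, PII)",
    "storage locations outside the United States",
    "data exchange method (e.g., secure web upload, secure file transfer)",
    "security, storage, network, and disaster recovery policies (incl. subcontractors)",
    "policy review and update procedures",
    "PCI DSS, HIPAA, and FERPA compliance policies",
    "SOC 1 and SOC 2 reports and recent external audit results",
    "physical security and access restrictions",
    "security incident response",
    "awareness training",
)


@dataclass
class VendorResponse:
    vendor: str
    # Maps a checklist topic to the vendor's answer; a missing or blank answer counts as unanswered.
    answers: dict[str, str] = field(default_factory=dict)

    def unanswered(self) -> list[str]:
        """Return the checklist topics for which the vendor gave no non-blank answer."""
        return [t for t in CHECKLIST_TOPICS if not self.answers.get(t, "").strip()]


def ready_for_review(response: VendorResponse) -> bool:
    """True only when every topic has an answer; it says nothing about whether the risk is acceptable."""
    return not response.unanswered()


# Usage: a vendor that answered only the first topic is not yet ready for review.
response = VendorResponse("Example Cloud Co.", {CHECKLIST_TOPICS[0]: "Student records and PII"})
print(ready_for_review(response))  # False
```

The design deliberately separates completeness from acceptability: a complete set of answers only means the reviewer has something to evaluate, and the judgment whether the disclosed risks are acceptable remains with the stakeholders.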

In addition to the items on the checklist, the provider should be asked to provide its most recent audited financial statements and, if it is publicly traded, its Form 10-K and Form 10-Q reports, so that the institution may examine its assets and liabilities and the risks it faces as an entity and within its industry. The stakeholders should also perform an independent assessment of the provider by conducting reference checks with existing customers, verifying the size of the provider’s customer base, and estimating the total amount of individual information stored within the provider’s services. In doing so, stakeholders will be able to project the potential losses the provider might suffer in the event of a system-wide breach and to gauge whether aggregating data heightens the risk of an attack. Together, the checklist responses and the additional information solicited should provide a clear picture of the potential risk of a data breach from using the vendor’s services; the vendor’s ability to prevent, detect, mitigate, and respond to a breach; and the vendor’s ability to withstand the financial impact of a significant breach.

If the institutional stakeholders are satisfied that the risks disclosed during due diligence may be adequately addressed through contract negotiation or other means, the institution should forward its form of agreement to the provider.[120] While the agreement will contain standard provisions applicable to all purchase agreements, it should also include the following key provisions addressing the heightened risks associated with data security and breaches.

Specifically:

- The agreement should contain representations by the provider that service and support will meet specified levels of service, that security will be provided to prevent unauthorized access or destruction in accordance with industry standards, and that storage and backup will be maintained so that data is in retrievable form to ensure the institution’s continuity of use after contract termination.

- The agreement should clearly state that the data is owned by the institution and may be used by the provider only to deliver the services. Data that constitutes confidential information should be clearly defined in the agreement and include, at a minimum, passwords, institutional data, personally identifiable information, student records, and health records.

- The agreement should identify the actions to be taken in the event of a data breach, which should include, at a minimum, prompt notice to the institution, investigation of the cause and prevention of any recurrence, responsibility for all institutional losses resulting from the breach, and the grant to the institution of sole authority to determine if, when, how, and to whom notice of the breach should be sent.

- Moreover, the agreement should exclude from any limitation of liability clause the provider’s intentional misconduct or gross negligence and any breach involving data or confidential information.

- To adequately protect against the risk of a data breach, the agreement should require the provider to name the institution as an additional insured on the provider’s relevant insurance policies, including cyber insurance and commercial general liability insurance (which should have limits of liability of no less than $1 million per occurrence or per claim), umbrella or excess insurance, and professional liability insurance (with limits of liability of at least $10 million unless the amount of data to be stored with the provider demonstrates that a higher limit is appropriate).

- Finally, the agreement should require the destruction of the institution’s data after the agreement is terminated and certification that destruction has occurred.

Very often, a provider will seek to restrict its liability for data breaches through a limitation of liability and may be unwilling to agree to an absolute exclusion for a data breach. In that event, the institution should evaluate the potential costs it may incur and losses it may suffer as a result of a data breach by considering the total number of records and number of individuals related to the data that will be transferred to the provider. At a minimum, the institution should expect to incur, in the event of a breach, costs associated with providing notice to individuals, credit monitoring, undertaking forensic analysis to identify the cause of the breach, adequately and responsibly responding to media inquiries while protecting the institution’s reputation, and responding to or defending third party claims. Studies that examined the losses associated with responding to data breaches over the past few years estimate these costs are approximately $200 per individual or 57 cents per record, and institutions should annually reevaluate this information to determine if costs are increasing.[121] At the present time, these studies provide a guideline for institutions to negotiate secondary caps on limitation of liability clauses for claims arising out of data breaches. In the event the provider is unwilling to agree to a secondary cap that will limit its liability for data breaches in an amount that is acceptable to the institution, the purchase of cyber insurance by the institution provides an alternative for mitigating that risk.[122]
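The arithmetic behind a secondary cap can be sketched as follows. This minimal Python example multiplies the number of affected individuals and the number of records by the per-unit figures cited above ($200 per individual and 57 cents per record). The function name and the choice to take the larger of the two estimates as a conservative starting point are illustrative assumptions, not a method drawn from the cited studies, and the default figures should be replaced with current data at each annual reevaluation.

```python
from decimal import Decimal

# Per-unit figures cited in the text; update them as newer studies are published.
COST_PER_INDIVIDUAL = Decimal("200")
COST_PER_RECORD = Decimal("0.57")


def breach_cost_estimates(individuals: int, records: int,
                          per_individual: Decimal = COST_PER_INDIVIDUAL,
                          per_record: Decimal = COST_PER_RECORD) -> dict[str, Decimal]:
    """Return per-individual and per-record estimates of breach response costs.

    "suggested_cap_floor" is an illustrative assumption: it takes the larger of the
    two estimates as a conservative starting point for negotiating a secondary cap.
    """
    if individuals < 0 or records < 0:
        raise ValueError("counts must be non-negative")
    by_individual = individuals * per_individual  # Decimal avoids binary rounding of cents
    by_record = records * per_record
    return {
        "by_individual": by_individual,
        "by_record": by_record,
        "suggested_cap_floor": max(by_individual, by_record),
    }


# Usage: a hypothetical transfer covering 25,000 individuals and 1,000,000 records.
estimates = breach_cost_estimates(individuals=25_000, records=1_000_000)
print(estimates["by_individual"], estimates["by_record"])  # 5000000 570000.00
```

The wide gap between the two results in this example ($5,000,000 versus $570,000) illustrates why the institution should consider both the number of individuals and the number of records before settling on an acceptable secondary cap.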

The risks inherent in storing personally identifiable information with a third party are institutional risks, and the members of the governing body owe a fiduciary duty to the institution to be fully informed of, and to consent to, these risks.[123] Therefore, it is recommended that the team of stakeholders, with the participation and approval of the institution’s president, present to the governing body a summary of the due diligence undertaken on the selected cloud provider and the terms of the agreement, along with an explanation of how the agreement or a cyber insurance policy will mitigate the risks associated with cloud data storage. The stakeholders’ work does not end upon approval by the governing body. As recent media coverage involving Rutgers University,[124] Penn State University,[125] and the Internal Revenue Service has shown, the question is no longer “if” a data breach will occur, but “when.” Consequently, institutions would be well served to prepare in advance of a data breach by creating a response team; implementing a response protocol and performing practice drills; establishing compliance activities to implement, monitor, review, and update data security policies; and regularly informing the governing body so that it can properly discharge its fiduciary duties.[126]

C. Insurance Coverage for Cyber Security Breaches

The importance of investing the necessary time, effort, and expense to identify and establish appropriate IT solutions for an institution’s ongoing educational, research, or business operations – including cloud-based alternatives – cannot be overstated. But even after an institution completes a comprehensive due diligence process and negotiates maximum contractual protection, the vast majority of cloud-based IT opportunities will nonetheless expose the institution to additional (and potentially substantial) risk, which must be mitigated to satisfy the governors’ or trustees’ obligations to exercise sound judgment and risk management in university governance. Accordingly, an institution must pursue an in-depth analysis of its existing insurance coverage to determine whether additional coverage is required to transfer the risk of potential loss and damage in the event of a data security breach.

At the outset, it is important to recognize that relying on existing commercial general liability (CGL) insurance to mitigate the risk of loss and damage from cyber security breaches is not appropriate without careful assessment, analysis, and decision-making regarding the potential risks the institution faces as a result of its data processing and data storage solutions, and the need for alternative risk mitigation and risk transfer mechanisms.[127] Recent developments regarding the availability of insurance coverage under a CGL policy for losses resulting from a cyber security breach demonstrate that coverage is far from certain. For example, the Connecticut Supreme Court recently affirmed an intermediate appellate court decision holding that no coverage was available under a CGL policy for $6 million of costs incurred as a result of the loss of 130 back-up tapes containing employment-related data of more than 500,000 past and current employees.[128] Similarly, a New York trial court concluded that the insurer had no duty to defend under a CGL policy because the acts of a third party, not the policyholder, caused the release of personal information in a data security breach.[129] Other courts, however, have reached the opposite result, concluding that coverage was available because the disclosure of personal information fell within the terms of the CGL policy at issue.[130] Separately, consistent with its steadfast position that the CGL policy was not intended to insure against the losses and damage suffered as a result of cyber security breaches, the insurance industry has introduced specific exclusions for general liability policies that purport to eliminate coverage for liability arising out of certain data breaches.[131] Given this “mixed bag” regarding availability, an institution relying on a CGL policy to provide coverage in the event of a data breach might succeed, but its likelihood of success is increasingly slim and depends on the jurisdiction and law applied to policy interpretation, the relevant facts, and the specific terms, conditions, and exclusions of the individual CGL policy.

Standalone cyber insurance policies can serve as an effective “gap filler” to cover some of the potential losses and damage that an educational institution may suffer from a data security breach that is not covered under other insurance. In general, cyber insurance provides coverage for certain losses arising out of data breaches, but not all cyber insurance policies are created equal. Therefore, the terms of each policy must be carefully reviewed to verify that coverage is provided for the potential losses identified in the due diligence process, including losses resulting from the services of third party cloud providers. Insurance coverage is available for losses related to third party claims, notification to individuals, credit monitoring, forensic investigations, public relations and crisis management, data recovery, and government sanctions (within and outside of the United States). It is also very important to consider the appropriate geographic scope of coverage, particularly with respect to cloud computing, which, as noted above, may result in data being sent or stored outside a defined geographic area, including outside the United States. The cost of cyber insurance varies by insurer and by the scope and amount of insurance desired, so focusing on the extent of necessary insurance is essential to obtaining appropriate, cost-effective coverage. Finally, by keeping IT security and data policies up to date and ensuring that third party cloud vendors adhere to those updated policies, any requirements imposed by law, and the terms of the negotiated contracts, institutions can minimize the cost of cyber insurance coverage while also lowering potential exposure.

It should be emphasized, however, that any cyber security breach that results in wrongfully disclosed data carries hidden costs that are difficult, if not impossible, to quantify and are generally not insurable. In this regard, institutions must be concerned with damage to their endowments, enrollment, and reputations, both among the individuals directly affected and because large or sensitive breaches draw unfavorable media attention. Further, responding to a breach impairs institutional productivity because employee time and effort are redirected from normal operations. Finally, a large breach erodes public trust, potentially further damaging future opportunities with prospective employees, potential students, alumni, and endowments.

In an effort to mitigate some of the risk associated with cloud-based data solutions, cyber insurance should be considered for the following categories of potential liability:

- Costs of notice, reporting, investigation, and credit monitoring in the event of a data security breach;

- Costs of defending third party lawsuits that may result from the loss of personally identifiable employee, alumni, or student information, in particular for public universities in the event the state attorney general’s office declines to defend;

- Statutory and/or regulatory investigation costs, penalties, and fees;

- Public relations and crisis management fees;

- Wrongful acts of outside vendors, consultants, or service providers;

- Data restoration costs to replace or restore a system that suffered a data security breach;

- Failure to prevent the spread of a virus or cyber attack within the institution’s network;

- Expenses required to respond to threats to harm or release data, as well as ransom payments; and

- Impairment or loss of data as the result of a criminal or fraudulent cyber incident, including theft and transfer of funds.

When evaluating the amount of coverage and the relevant terms, conditions, and exclusions, note that a recent study estimates that the cost of a data breach for an educational institution could average as high as $300 per lost or stolen record, which would quickly exhaust a $5 million policy with a breach of only about 16,700 records (well below the average number of records per breach in 2015).[132] Moreover, educational institutions should insist on readily understandable policy wording; for example, some policies distinguish between “lost” and “stolen” data in ways that can serve to exclude coverage.[133] In addition, as noted above, an institution that was unable to secure sufficiently favorable terms regarding a vendor’s obligations in its contract with that vendor may nonetheless mitigate some of that risk by negotiating with the insurer to include coverage for certain acts and omissions of cloud vendors. Finally, because data breaches are a relatively recent phenomenon, and the costs and manner of resolving any resulting third party claims are evolving, purchasers of cloud services should reevaluate annually the limits of liability and the terms, conditions, and exclusions of their cyber insurance policies to verify that they are adequately insured.
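The exhaustion figure in the preceding paragraph is simple division: a policy limit divided by an assumed average cost per record gives the number of compromised records at which coverage runs out. The following Python sketch reproduces that calculation with the $5 million limit and $300 per-record estimate from the text. The function names are hypothetical, and the per-record figure is an average that will vary by incident.

```python
import math
from decimal import Decimal


def records_to_exhaust(policy_limit: Decimal, cost_per_record: Decimal) -> int:
    """Smallest number of compromised records whose estimated cost reaches the policy limit."""
    if cost_per_record <= 0:
        raise ValueError("cost_per_record must be positive")
    # math.ceil accepts Decimal and returns an int.
    return math.ceil(policy_limit / cost_per_record)


def estimated_breach_cost(records: int, cost_per_record: Decimal) -> Decimal:
    """Estimated response cost for a breach of the given size at the assumed average cost."""
    return records * cost_per_record


# Usage with the figures cited in the text.
print(records_to_exhaust(Decimal("5000000"), Decimal("300")))  # 16667
```

The result, 16,667 records, is consistent with the roughly 16,700 records noted above. Rerunning the same calculation with updated per-record estimates is one straightforward way to carry out the annual reevaluation of policy limits.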

V. Conclusion

Optimizing an educational institution’s cyber risk protection mechanisms involves a considerable commitment of resources to achieve focused preparation, analysis, and decision-making. Given the ever-increasing sophistication of cyber security threats and the expanding use of cloud-based alternatives for data processing and storage, educational institutions must take proactive steps to protect information and secure maximum protection against potentially crippling liability in the event of a data security breach. Within the past year, many educational institutions have been victims of data breaches or experienced serious system failures, even where high levels of security controls had been implemented in response to high levels of risk.[134] Appropriate cyber insurance thus should be considered an integral part of any institution’s cyber security protections. Cyber insurance is not a substitute for properly designed and implemented data security programs, but it can serve as effective supplementary protection to which educational institutions and boards of trustees or governors may turn when data security breaches occur despite best efforts at prevention.

[1] Maria C. Anderson is Associate University Counsel for Montclair State University. Luis J. Diaz is a Director and Chief Diversity Officer for Gibbons P.C., and focuses his practice on a broad range of technology related matters. John T. Wolak is a Director at Gibbons who focuses his practice on a broad range of commercial and insurance related matters. David Opderbeck is a Professor at Seton Hall University’s School of Law and Director of the Gibbons Institute of Law, Science and Technology. The authors thank June Kim, Associate at Gibbons, for her extensive editorial assistance.

[2] Peter T. Lewis, Speech, CONGRESSIONAL BLACK CAUCUS FOUNDATION 15TH ANNUAL LEGISLATIVE WEEKEND, September 1985. See also, International Telecommunication Union, ITU Internet Reports: The Internet of Things, November 2005, available at: https://www.itu.int/net/wsis/tunis/newsroom/stats/The-Internet-of-Things-2005.pdf.

[3] See, infra, n. 6.

[4] Federal Trade Commission v. Wyndham Worldwide Corporation, U.S. District Court for New Jersey, Civil Action No. 2:13-CV-01887-ES-JAD.

[5] Mary Jo White, Opening Statement at SEC Roundtable on Cybersecurity, U.S. SECURITIES AND EXCHANGE COMMISSION, March 26, 2014, available at https://www.sec.gov/News/PublicStmt/Detail/PublicStmt/1370541286468.

[6] After the breach of consumer records at Target, a shareholder derivative suit was filed in the District of Minnesota alleging that board members breached their fiduciary duties to the company by failing to maintain adequate controls to ensure the security of data affecting as many as 70 million customers who shopped at Target between November 27, 2013 and December 15, 2013. See Kulla v. Steinhafel, No. 14-CV-00203 (D. Minn. July 18, 2014). After the data breach, proxy advisory firm Institutional Shareholder Services recommended that seven of Target’s ten board members be removed. See Kavita Kumar, Most of Target’s Board Members Must Go, Proxy Advisor Recommends, Star Tribune, May 29, 2014, http://www.startribune.com/most-of-target-s-board-should-go-proxy-adviser-recommends/260960251/. The data breach required Target to defend its board members under public scrutiny in response to pressure from an influential shareholder. See Kavita Kumar, Target Board Defends its Role, Before and After Data Breach, Star Tribune, June 4, 2014, http://www.startribune.com/target-board-defends-its-role-before-and-after-data-breach/261527581/. Although the Board remained intact, Target replaced its Chief Executive Officer following the breach and appointed a new Chief Information Officer. See Kavita Kumar, Target’s 10 Member Board Survives Vote of Shareholders, Star Tribune, July 2, 2014, http://www.startribune.com/june-12-target-s-board-survives-vote-of-shareholders/262727811/.

[7] Eduardo Gallardo and Andrew Kaplan, Board of Directors’ Duty of Oversight and Cybersecurity, Delaware Business Court Insider, August 20, 2014 (citing Stone v. Ritter, 911 A.2d 362, 370 (Del. 2006), and relying upon In re Caremark Int’l Derivative Litigation, 698 A.2d 959 (Del. Ch. 1996)).

[8] Foley and Lardner LLP, Taking Control of Cybersecurity: A Practical Guide for Officers and Directors, March 11, 2015, available at http://www.foley.com/taking-control-of-cybersecurity-a-practical-guide-for-officers-and-directors-03-11-2015/.

[9] See Noah G. Susskind, Cybersecurity Compliance and Risk Management Strategies: What Directors, Officers, and Managers Need to Know, 11 N.Y.U. J. L. & Bus. 573, 603 (2015).

[10] Family Educational Rights and Privacy Act, 73 Fed. Reg. 74806, 74843 (Dec. 9, 2008) (codified at 34 C.F.R. pt. 99), available at http://www2.ed.gov/legislation/FedRegister/finrule/2008-4/120908a.pdf.

[11] See Association of Governing Boards of Universities and Colleges, A Wake-Up Call: Enterprise Risk Management at Colleges and Universities Today at 2 (2013), available at http://agb.org/sites/agb.org/files/RiskSurvey2014.pdf.

[12] Id.

[13] Susskind, supra note 9, at 603.

[14] Salar Ghahramani, Fiduciary Duty and the Ex Officio Conundrum in Corporate Governance: The Troublesome Murkiness of the Gubernatorial Trustee’s Obligations, 10 Hastings Bus. L.J. 1, 11 (2014).

[15] Lyman P.Q. Johnson & Mark A. Sides, Corporate Governance and the Sarbanes-Oxley Act: The Sarbanes-Oxley Act and Fiduciary Duties, 30 Wm. Mitchell L. Rev. 1149, 1223-1224 (2004).

[16] See N.Y. Not-for-Profit Corp. Law § 722 (2014). See also, Vacco v. Diamandopoulos, 715 N.Y.S.2d 269 (N.Y. Sup. Ct. 1998) (defendants, as former university trustees, were held financially accountable for mismanagement of the university’s assets and held to have violated the duties of care and loyalty owed to the university). See also, N.Y. Not-for-Profit Corp. Law § 717 (directors are required to discharge their duties in good faith and “with the care an ordinarily prudent person in a like position would exercise under similar circumstances”).

[17] Joseph Anthony Valenti, Know the Mission: A Lawyer’s Duty To a Nonprofit Entity During An Internal Investigation, 22 St. Thomas L. Rev. 504, 509 (2010).

[18] Erin Kenneally & John Stanley, Beyond Whiffle-Ball Bats: Addressing Identity Crime In An Information Economy, 26 J. Marshall J. Computer & Info. L. 47, 130 (2008). Although most data breach class actions have been unsuccessful because of the plaintiffs’ inability to plead an “actual or imminent” injury sufficient to establish Article III standing, on December 18, 2014, the U.S. District Court for the District of Minnesota ruled that a class of consumers could proceed with a majority of their claims against Target arising from the data breach it suffered in late 2013. See In re: Target Corporation Customer Data Security Breach Litigation, MDL No. 14-2522, 2014 U.S. Dist. LEXIS 175768 (D. Minn. Dec. 18, 2014). In addition, a class action filed against AvMed, Inc. settled for $3 million (after being dismissed twice by a Florida District Court and reinstated by the U.S. Court of Appeals for the Eleventh Circuit) and did not require class members to prove actual damages, suggesting that recovery may not require proof of damages or causation. See Philippa Maister, After the Breach: Plaintiffs Secure a Settlement that Doesn’t Require Proof of Damages, Corporate Counsel, July 2014, at 15.

[19] Salar Ghahramani, Fiduciary Duty and the Ex Officio Conundrum in Corporate Governance: The Troublesome Murkiness of the Gubernatorial Trustee’s Obligations, 10 Hastings Bus. L.J. 1, 7 (2014).

[20] Id. at 13.

[21] Id.

[22] Id.

[23] Id.

[24] Id.

[25] Ponemon Institute LLC, Cyber Security Incident Response: Are We as Prepared as We Think?, January 2014, available at https://www.lancope.com/sites/default/files/Lancope-Ponemon-Report-Cyber-Security-Incident-Response.pdf.

[26] The vast majority of states provide that the members of a board of a not-for-profit are held to the same standards as those applicable to the board of a for-profit corporation. See 15 Pa. Cons. Stat. § 5712 (2014). See also Ariz. Rev. Stat. § 10-830 (LexisNexis 2014), Ark. Code Ann. § 4-28-618 (2014), Cal. Corp. Code § 5231 (Deering 2014), Colo. Rev. Stat. § 7-128-401 (2014), Conn. Gen. Stat. § 33-1104 (2014) (director must discharge his duties “in a manner he reasonably believes to be in the best interests of the corporation”); Fla. Stat. § 617.0830 (2014), Ga. Code Ann. § 14-3-830 (2014), Haw. Rev. Stat. § 414D-149 (2014), Idaho Code Ann. § 30-3-80 (2014), Ind. Code Ann. § 23-17-13-1 (2014), Iowa Code § 504.831 (2014), Ky. Rev. Stat. Ann. § 273.215 (LexisNexis 2014), La. Rev. Stat. Ann. § 12:226 (2014), Me. Rev. Stat. tit. 13-B, § 717 (2014), Mass. Ann. Laws ch. 180, § 6C (LexisNexis 2014), Minn. Stat. § 317A.251 (2014), Miss. Code Ann. § 79-11-267 (2014), Mo. Rev. Stat. § 355.001 (2014), Mont. Code Ann. § 35-2-416 (2014), Neb. Rev. Stat. Ann. § 21-1986 (LexisNexis 2014), Nev. Rev. Stat. Ann. § 82.221 (2014), N.J. Rev. Stat. § 15A:6-14 (2014) (trustees and members of any committee designated by the board are required to “discharge their duties in good faith and with that degree of diligence, care and skill which ordinary, prudent persons would exercise under similar circumstances in like positions”); N.M. Stat. Ann. § 53-8-25.1 (LexisNexis 2014), N.C. Gen. Stat. § 55A-8-30 (2014), N.D. Cent. Code § 10-33-45 (2014), Ohio Rev. Code Ann. § 1702.30 (LexisNexis 2014), 15 Pa. Cons. Stat. § 5712 (2014) (a director of a not-for-profit corporation is held as a fiduciary and must perform his or her duties in good faith and with such care as a person of ordinary prudence would use under similar circumstances); R.I. Gen. Laws § 7-6-22 (2014), Tenn. Code Ann. § 48-58-301 (2014), Tex. Bus. Orgs. Code Ann. § 22.221 (2014), Utah Code Ann. § 16-6a-822 (LexisNexis 2014), Vt. Stat. Ann. tit. 11B, § 8.30 (2014), Va. Code Ann. § 13.1-870 (2014), Wash. Rev. Code Ann. § 24.03.127 (LexisNexis 2014), W. Va. Code § 31E-8-830 (2014).

[27] Id. at 15.

[28] Id.

[29] Id.

[30] Supra note 24.

[31] 20 U.S.C. § 1232g. Regulations under FERPA are codified at 34 C.F.R. pt. 99 (2011). In addition to FERPA, several other federal laws implicate the privacy of educational records and should be considered during the due diligence phase. See, e.g., Individuals with Disabilities Education Act, 20 U.S.C. §§ 1400–1487; Protection of Pupil Rights Amendment, 20 U.S.C. § 1232h (1978); USA Patriot Act, Pub. L. No. 107-56 (2001); Privacy Act of 1974, 5 U.S.C. § 552a; and Campus Sex Crimes Prevention Act, Pub. L. No. 106-386.

[32] FISMA requires that every federal agency develop and implement an agency-wide program to provide information security for the information systems and information that support the operations and assets of the agency, including those provided or managed by another agency, contractor, or other source. See 44 U.S.C. § 3544 et seq. This requirement is often passed through to higher education institutions as a condition of grants or contracts with federal agencies funding research. See Charles H. Le Grand, Handbook for Internal Auditors § 23.07 (Matthew Bender & Company Inc. 2014).

[33] See 42 U.S.C. § 1320d et seq. HIPAA required the Secretary of the U.S. Department of Health and Human Services (the “Secretary”) to adopt national standards to, inter alia, protect the privacy of individually identifiable health information and maintain administrative, technical, and physical safeguards for the security of health information. 42 U.S.C. § 1320d-2(a)–(d). Health plans, health care clearinghouses, and health care providers who engage in standardized transactions and transmit financial and administrative claims electronically are covered entities under HIPAA and must comply with its standards and regulations. See 42 U.S.C. § 1320d-4(b).

[34] The U.S. Department of Education established a Privacy Technical Assistance Center (PTAC) as a resource to assist institutions with ensuring the protection of data, compliance with privacy laws, and development of confidentiality and security practices associated with technology systems. See U.S. Department of Education Privacy Technical Assistance Center, Home, http://ptac.ed.gov/. PTAC provides timely information and updated guidance on privacy, confidentiality, and security practices through a variety of resources, including training materials and opportunities to receive direct assistance with privacy, security, and confidentiality of student data systems.

[35] FERPA applies to all educational institutions that receive funding under any program administered by the Department of Education, which encompasses virtually all public schools and most private and public postsecondary institutions, including medical and other professional schools. See 20 U.S.C. § 1232g (requires higher education institutions that receive federal funds administered by the Secretary of Education to ensure certain minimum privacy protections for educational records); 34 C.F.R. § 99.1 (FERPA defines an educational institution to include “any public or private agency or institution which is the recipient of funds”). See also Jennifer C. Wasson, FERPA in the Age of Computer Logging: School Discretion at the Cost of Student Privacy?, 81 N.C. L. Rev. 1348, 1353 (2003).

[36] An educational record subject to FERPA is “directly related to a student” and “maintained by an educational agency or institution or by a party acting for such agency or institution.” See 34 C.F.R. § 99.3. Some examples of educational records include student files, student system databases kept in storage devices, or recordings and/or broadcasts. See 20 U.S.C. § 1232g(a)(4)(A).

[37] FERPA does not prohibit the use of cloud computing but requires higher education institutions to use reasonable methods to ensure the security of any information technology solutions, including cloud computing. See U.S. Department of Health & Human Services & U.S. Department of Education, Joint Guidance on the Application of the Family Educational Rights and Privacy Act (FERPA) and the Health Insurance Portability and Accountability Act of 1996 (HIPAA) to Student Health Records Nov. 2008, available at http://www.hhs.gov/ocr/privacy/hipaa/understanding/coveredentities/hipaaferpajointguide.pdf. FERPA does not, however, affirmatively require schools to implement specific procedures for cloud computing or to provide notification in the event of a data breach. Notification by the institution in the event of a data breach may nonetheless be required pursuant to state law or even the institution’s own internal policies.

[38] Directory information may include “the student’s name, address, telephone listing, date and place of birth, major field of study, participation in officially recognized activities and sports, weight and height of members of athletic teams, dates of attendance, degrees and awards received, and the most recent previous educational agency or institution attended by the student.” See 20 U.S.C. § 1232g(a)(5)(A).

[39] See, e.g., 34 C.F.R. § 99.3. See also 20 U.S.C. § 1232g(b). Information disclosed in combination with a student ID number, rather than a student name, is considered PII under FERPA and subject to heightened protection; only when an educational institution removes all PII and assigns the records non-personal identifiers are disclosures to outside parties permitted without prior consent. See 20 U.S.C. § 1232g(a)(5).

[40] One exception is pragmatic, permitting disclosures in connection with confidential and anonymous studies undertaken on behalf of the educational institution. See 20 U.S.C. § 1232g(b)(1)(F) (such studies must be “for the purpose of developing, validating, or administering predictive tests, administering student aid programs, and improving instruction”); see also 34 C.F.R. § 99.31(a)(6). This information must be destroyed when no longer needed for the purposes for which the study was conducted. See 34 C.F.R. § 99.31(a)(6)(iii)(B). The educational institution must enter into an agreement with the organization conducting the study that limits the use of the PII and requires the organization to maintain confidentiality and anonymity and to destroy the PII once it is no longer needed. See 34 C.F.R. § 99.31(a)(6)(iii)(C)(1)–(4). Another exception provided by FERPA is in connection with audits and evaluations of programs conducted by local, federal, or state officials and their authorized representatives. See 20 U.S.C. § 1232g(b)(1)–(5).

[41] 20 U.S.C. § 1232g(b)(1)(A). See also 34 C.F.R. § 99.31(a)(1).

[42] 34 C.F.R. § 99.31(a)(1)(i)(B) (third party must (i) “perform an institutional service or function for which the…institution would otherwise use employees”; (ii) “[be] under the direct control of the…institution with respect to the use and maintenance of education records”; and (iii) be subject to certain FERPA requirements governing the use and re-disclosure of PII in educational records).

[43] 34 C.F.R. § 99.33(a)(1).

[44] 34 C.F.R. § 99.33(a)(2).

[45] HIPAA established a national health information privacy rule, which required the Secretary to issue final Standards for Privacy of Individually Identifiable Health Information, known as the Privacy Rule. See 45 C.F.R. pt. 164, subpt. E. The Privacy Rule applies to health plans, health care clearinghouses, and health care providers who transmit financial and administrative transactions electronically to third parties for reimbursement of medical expenses, including medical universities that offer health care to individuals in the normal course of business or the fulfillment of academic credentials (e.g., through a university medical hospital or faculty/physician practice). See U.S. Department of Health and Human Services and U.S. Department of Education, supra note 37.

[46] “The term ‘individually identifiable health information’ means any information, including demographic information collected from an individual, that – (A) is created or received by a health care provider, health plan, employer, or health care clearinghouse; and (B) relates to the past, present, or future physical or mental health or condition of an individual, the provision of health care to an individual, or the past, present, or future payment for the provision of health care to an individual, and – (i) identifies the individual; or (ii) with respect to which there is a reasonable basis to believe that the information can be used to identify the individual.” 42 U.S.C. § 1320d(6).

[47] 45 C.F.R. § 160.103.

[48] 45 C.F.R. § 164.530(c). This regulation also provides specific requirements regarding the structure around such safeguards, including designating a privacy official, training the workforce, providing a process for individuals to make complaints and documenting those complaints, applying sanctions against workforce members who violate privacy policies, refraining from retaliatory acts, and other important structural components.

[49] Pursuant to the Privacy Rule, a covered entity must receive satisfactory assurances from its business associate that the business associate will appropriately safeguard the protected health information before sending PHI to the third party or having it create PHI on behalf of the covered entity. The satisfactory assurances must be in writing, whether in the form of a contract or other agreement between the covered entity and the business associate. See 45 C.F.R. §§ 164.502(e), 164.504(e), 164.532(d) and (e). For further information about business associates in the HIPAA context, see Business Associates, U.S. Dep’t of Health and Human Services, available at http://www.hhs.gov/ocr/privacy/hipaa/understanding/coveredentities/businessassociates.html.

[50] 45 C.F.R. § 164.506.

[51] 45 C.F.R. § 164.508. Among these exceptions, PHI may be used or disclosed without patient authorization or prior agreement for public health, judicial, law enforcement, and other specifically enumerated purposes. See 45 C.F.R. § 164.512(a)–(l). “When the covered entity is required by this section to inform the individual of, or when the individual may agree to, a use or disclosure permitted by this section, the covered entity’s information and the individual’s agreement may be given orally.” See 45 C.F.R. § 164.512. For some situations that might otherwise require authorization, a covered entity may use or disclose PHI without authorization so long as the individual was given the prior opportunity to object or agree. See 45 C.F.R. § 164.510 (e.g., for use in a directory, under emergency circumstances, for use in the care of the individual, for disaster relief, or when the individual is deceased).

[52] 42 U.S.C. §§ 1320d-2 and (d)(4). HHS issued these standards in 2003.

[53] 45 C.F.R. § 164.306(a). See also 42 U.S.C. § 1320d-2(d) (requiring covered entities to protect the electronic PHI against any reasonably anticipated threats or hazards to the security or integrity of such information, as well as any reasonably anticipated uses or disclosures of such information that are not permitted or required under the Privacy Rule). See also 42 U.S.C. § 1320d-2(d)(2)(C) (covered entities are also responsible for ensuring compliance by their employees).

[54] Under such agreements, the third party must: implement administrative, physical, and technical safeguards that reasonably and appropriately protect the confidentiality, integrity, and availability of the covered entity’s electronic PHI; ensure that its agents and subcontractors to whom it provides the PHI do the same; and report to the covered entity any security incident of which it becomes aware. See 45 C.F.R. § 164.504(e)(2). The contract must also authorize termination if the covered entity determines that the third party has violated a material term. See 45 C.F.R. § 164.504(e)(2)(iii). Additionally, if a covered entity’s third party business partner violates the Security Rule, the covered entity is not liable unless it knew that the third party was engaged in a practice or pattern of activity that violated HIPAA and failed to take corrective action. See 45 C.F.R. § 164.504(e)(1). The HITECH Act extended application of some provisions of the HIPAA Privacy and Security Rules to the business associates of HIPAA-covered entities, in particular, making those business associates subject to civil and criminal liability for improper disclosure of PHI; establishing new limits on the use of PHI for marketing and fundraising purposes; providing new enforcement authority for state attorneys general to bring suit in federal district court to enforce HIPAA violations; increasing civil and criminal penalties for HIPAA violations; requiring covered entities and business associates to notify the public and HHS of data breaches; changing certain use and disclosure rules for protected health information; and creating additional individual rights. See 78 Fed. Reg. 5566–5702.

[55] “Covered entities and business associates may use any security measures that allow them to reasonably and appropriately implement the standards and implementation specifications as specified in this subpart.” See 45 C.F.R. § 164.306(b)(1).

[56] 45 C.F.R. § 164.306(b)(2)(i)–(iv).